3 Things That Make or Break Your Conversational AI Experience

Just about everyone has an IVR horror story to share. Being forced to listen to repetitive menus. Getting stuck in an endless cycle of trying to navigate through options that don’t meet your needs. Wanting to throw the phone against the wall in frustration after hearing an automated voice announce, “I’m sorry, but I didn’t get your response.”

Over the past few years, the evolution of conversational AI and its delivery over the cloud has enabled businesses to go far beyond the boundaries of traditional IVRs. Companies of all kinds are automating more conversations than ever before while maintaining, and often improving, the customer experience with AI-powered virtual agents for voice that can also be used in digital channels like chat and text.

After deploying and managing AI-powered CX for more than 100 brands, we can quickly point to 3 factors that dominate the success or failure of every conversational AI experience. If you get these 3 areas wrong, it doesn’t matter what else you are doing right.

1st Factor – Choosing the Right Speech Rec Approach

Speech rec over telephony is really hard to do well. That might not surprise anyone considering the poor experiences we’ve all had. But you may not know why it is so hard, or what the leading edge of AI is doing to solve it.

When you speak directly into your phone or home device, that’s a high-def experience. The speech rec is so good because it captures the full range of highs and lows at the device level, which makes it easy to distinguish utterances and relate them to letters, syllables, and words. But the moment you call into a customer service line and those sound waves travel over outdated telephony infrastructure, the audio is reduced to an 8 kHz sampling rate in most cases, which cuts the range of highs and lows by more than half. And, as if that weren’t bad enough, the network adds noise.

This is why conversational AI over telephony is such a difficult challenge. It’s also why the same transcription-based engines from providers like Google or Amazon don’t deliver a good enough customer experience at the contact center level: over telephony audio they are about 50% less accurate. So you need AI that is purpose-built for this kind of challenge, which means training models on domain-specific telephony audio and, more importantly, being able to customize language and acoustic models on a question-by-question basis to account for the narrow scope of expected answers. It’s that kind of custom development work that moves accuracy rates into the 90% range.
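To make the question-by-question idea concrete, here is a minimal Python sketch. It is not how a production speech engine works: real systems tune grammars and acoustic models, while this example swaps in plain string similarity from the standard library to show why a narrow set of expected answers helps. The question IDs, answer lists, and the `constrain` function are all hypothetical names chosen for illustration.

```python
from difflib import get_close_matches

# Hypothetical per-question answer sets. A production system would use
# tuned grammars and acoustic models rather than string matching.
EXPECTED_ANSWERS = {
    "confirm": ["yes", "no"],
    "vehicle_problem": ["flat tire", "dead battery", "locked out", "out of gas"],
}


def constrain(question_id, transcript, cutoff=0.6):
    """Snap a noisy transcript to the closest expected answer, or None."""
    candidates = EXPECTED_ANSWERS[question_id]
    cleaned = transcript.lower().strip()
    # get_close_matches returns the best candidates whose similarity
    # ratio is at least `cutoff`, best match first.
    matches = get_close_matches(cleaned, candidates, n=1, cutoff=cutoff)
    return matches[0] if matches else None


print(constrain("vehicle_problem", "flat fire"))  # flat tire
print(constrain("confirm", "pizza"))              # None
```

Because each question only has to choose among a handful of plausible answers, a slightly misheard phrase can still land on the right one, and an answer that fits nothing can trigger a re-prompt instead of a wrong guess.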

2nd Factor – Human-centric Design

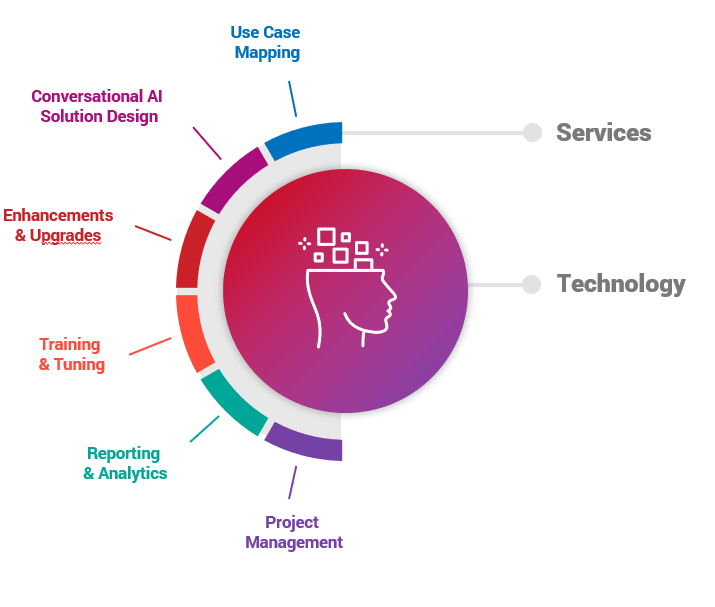

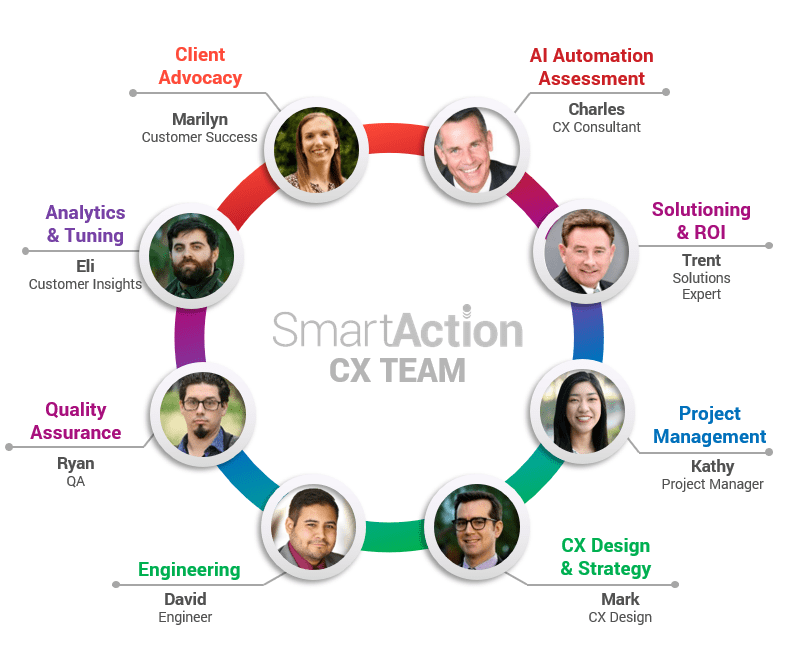

If you were to attempt voice automation on your own, particularly natural language automation, the outer ring in the diagram shows all the jobs or functions where you would need an expert in that field. The complexity involved in delivering a great voice experience and operating it over time is ultimately why we deliver our technology as a service.

The big thing to understand is that Conversational AI is not an off-the-shelf product – it’s merely a tool set. It’s a solution that requires ongoing care and feeding. In fact, once you “go live,” you’ve only started.

While 8 different teams are listed above, let’s take just one example in the area of CX design.

Here is a call into emergency roadside assistance prior to the CX design work by Hollywood scriptwriters.

Here is that same call after it went through our CX design team. You’ll hear the tension relieved at the 14-second mark, and the call ends in less time than it took the first call to get to the problem statement.

3rd Factor – Iterative Process of Improvement

To do voice well, you need real people who are experts in AI-powered CX and committed to an ongoing process of improvement. This means obsessing over the CX and scrutinizing containment across every interaction to identify points of friction, then iterating and improving week by week.

You need data scientists using big data tools like Splunk and Power BI, working closely with customer insight managers and customer success managers to extract actionable insights.

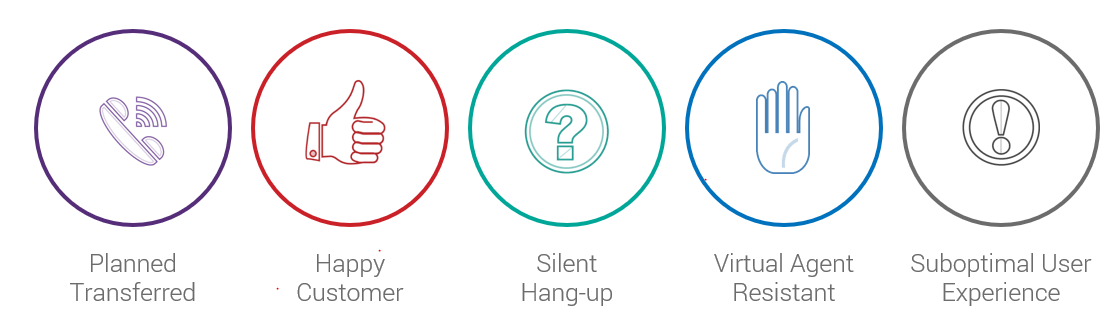

They monitor various end states and outcomes. By focusing on select outcomes, they can follow the breadcrumbs back to the point in a call that needs to be QA’d and identify the actions that must be taken, such as tuning grammars, changing the conversation flow, or identifying new data sources, to really chase the frictionless experience.
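As a rough illustration of following breadcrumbs back from outcomes, the Python sketch below computes a containment rate and ranks the dialog steps where non-contained calls ended. It assumes containment means a call was resolved by the virtual agent without a handoff to a live agent. The call records, field names, and outcome labels are invented for the example and do not come from Splunk, Power BI, or any real log format.

```python
from collections import Counter

# Hypothetical call records: the last dialog step reached and the end state.
calls = [
    {"call_id": 1, "last_step": "dispatch_confirmed", "outcome": "contained"},
    {"call_id": 2, "last_step": "collect_location", "outcome": "transferred"},
    {"call_id": 3, "last_step": "dispatch_confirmed", "outcome": "contained"},
    {"call_id": 4, "last_step": "collect_location", "outcome": "abandoned"},
    {"call_id": 5, "last_step": "verify_membership", "outcome": "transferred"},
    {"call_id": 6, "last_step": "dispatch_confirmed", "outcome": "contained"},
]


def containment_rate(records):
    """Share of calls that ended without leaving the virtual agent."""
    if not records:
        return 0.0
    contained = sum(1 for r in records if r["outcome"] == "contained")
    return contained / len(records)


def friction_points(records):
    """Dialog steps where non-contained calls ended, most frequent first."""
    exits = Counter(
        r["last_step"] for r in records if r["outcome"] != "contained"
    )
    return exits.most_common()


print(f"Containment: {containment_rate(calls):.0%}")
for step, count in friction_points(calls):
    print(f"{step}: {count} non-contained calls")
```

In this made-up sample, the location step accounts for most of the calls that were not contained, so that is where a reviewer would listen to recordings first and decide whether the fix is a grammar, a prompt, or the flow itself.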

To hear and see the AI experience, go to www.smartaction.ai/listen for OnDemand examples or even schedule a demo to try the experience for yourself first-hand.